Lifeway's Event Driven Architecture

How Lifeway uses Event Driven Architecture to scale its eCommerce platform and rapidly ship new features.

Back in 2016, Lifeway took on the HUGE challenge of re-architecting its entire backend eCommerce platform. We went from a monolithic, WebSphere-based system to an event-driven, microservices approach with Apache Kafka and Kubernetes as the backbone of our enterprise. The path to get there wasn't perfect and we made many mistakes along the way, but the end result has set our development teams up for success over the long haul. In this post, we'll take a retrospective look at the architecture we implemented, the challenges we faced during implementation, how it affects our development teams today, and what Lifeway is preparing for in the future.

The Architecture

At its core, this initiative was about:

Resiliency

By leveraging asynchronous events for integrations between business domains, we projected that the overall resiliency of our platforms would be significantly improved.

Scalability

By embracing the cloud and migrating off of our own data center, we expected to be able to scale to business needs and our customer volume more flexibly.

Elimination of Tight Coupling

By splitting capabilities out into microservices, we expected that business domains would no longer be tightly coupled, limiting customer impact during production incidents and allowing for more graceful fault tolerance.

Evolutionary Architecture

With common interfaces and design paradigms in place, we expected to be able to iterate easily on top of this architecture. We should be able to distill any new large-scale feature into its smallest units, expressed as events, and implement it with agility and minimal disruption.

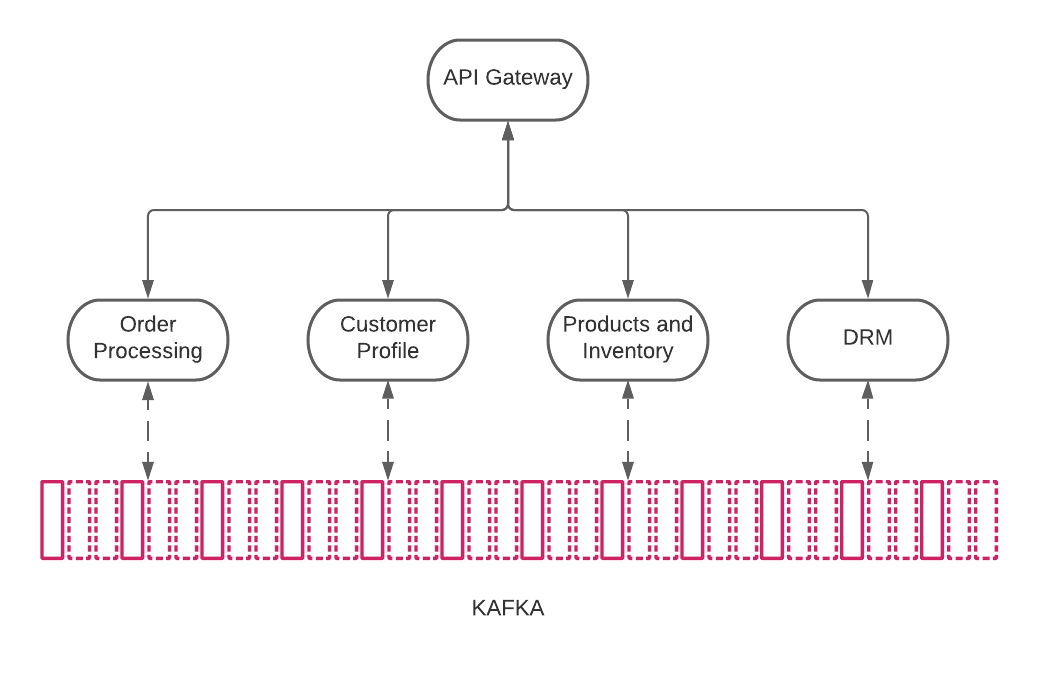

As pictured, we separated previously coupled domains of our business, which had all been hosted on one centralized piece of infrastructure, into logical components that are managed separately. We then introduced Kafka as an asynchronous way for these domains to communicate, and with it we established an "Enterprise Event Envelope". In essence, this is a contract that all applications must adhere to when publishing events to, or consuming events from, other domains.

    {
      "id": "4118ca5c-2d28-4ebd-90ca-66eb2ede7314",
      "timestamp": "2021-02-27T16:31:23.885Z",
      "version": 1,
      "payload": {
        "id": "b9b1b8eb-a9a5-4880-a444-fd26a8cda03e",
        "dateCreated": "2021-02-27T16:31:23.885Z",
        "dateUpdated": "2021-02-27T16:31:23.885Z",
        "firstName": "Leeroy",
        "lastName": "Jenkins",
        "gender": "MALE"
      },
      "eventType": "PersonCreated",
      "domain": "Customer"
    }

The example event above demonstrates this event envelope. It gives applications a standard way to communicate, with the necessary routing information (such as eventType and domain) at the header level and application-specific data at the payload level.
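To make the contract concrete, here is a minimal sketch of the envelope as a typed structure. The field names come from the example event; everything else (the `EventEnvelope` class, the `new_event` helper, the validation rule, and the choice of Python) is an assumption for illustration and not Lifeway's actual implementation.

```python
import json
import uuid
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any

# Header fields copied from the example event above.
REQUIRED_FIELDS = ("id", "timestamp", "version", "payload", "eventType", "domain")


@dataclass(frozen=True)
class EventEnvelope:
    """Hypothetical typed view of the envelope."""
    id: str
    timestamp: str
    version: int
    payload: dict[str, Any]
    eventType: str
    domain: str

    def to_json(self) -> str:
        """Serialize the envelope for publishing."""
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(raw: str) -> "EventEnvelope":
        """Parse a consumed message, rejecting it if a header field is missing."""
        data = json.loads(raw)
        missing = [name for name in REQUIRED_FIELDS if name not in data]
        if missing:
            raise ValueError(f"envelope is missing fields: {missing}")
        return EventEnvelope(**{name: data[name] for name in REQUIRED_FIELDS})


def new_event(domain: str, event_type: str, payload: dict[str, Any],
              version: int = 1) -> EventEnvelope:
    """Wrap an application payload in a fresh envelope (assumed helper)."""
    now = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    return EventEnvelope(
        id=str(uuid.uuid4()),
        timestamp=now.replace("+00:00", "Z"),  # match the "...Z" style in the example
        version=version,
        payload=payload,
        eventType=event_type,
        domain=domain,
    )
```

A publisher would call something like `new_event("Customer", "PersonCreated", {...}).to_json()`, and a consumer would call `EventEnvelope.from_json(message)` before touching the payload, so a malformed envelope fails fast at the boundary.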

The most important parts of these "events" are the eventType and the payload. Together, they communicate that something has occurred in our business process, so that other systems can consume the event and process it within their own domain context. In the example above, a PersonCreated event indicates that a new customer was created, either through registration or by other means.
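On the consuming side, routing on domain and eventType might look roughly like the sketch below. The `on` decorator, the `dispatch` function, and the welcome-email handler are hypothetical names invented for illustration; a real consumer would read messages from Kafka rather than receive a string directly.

```python
import json
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]

# Registry keyed by (domain, eventType); hypothetical, for illustration only.
_handlers: dict[tuple[str, str], list[Handler]] = {}


def on(domain: str, event_type: str) -> Callable[[Handler], Handler]:
    """Register a handler for one kind of event."""
    def register(handler: Handler) -> Handler:
        _handlers.setdefault((domain, event_type), []).append(handler)
        return handler
    return register


def dispatch(raw_event: str) -> int:
    """Route one serialized envelope to its handlers; return how many ran."""
    event = json.loads(raw_event)
    handlers = _handlers.get((event["domain"], event["eventType"]), [])
    for handler in handlers:
        handler(event["payload"])
    return len(handlers)


# Stand-in for a side effect such as queueing a welcome email.
welcomed: list[str] = []


@on("Customer", "PersonCreated")
def queue_welcome_email(payload: dict[str, Any]) -> None:
    welcomed.append(f'{payload["firstName"]} {payload["lastName"]}')
```

Events that no handler has registered for are simply ignored, which is what lets a domain publish new event types without coordinating with every consumer first.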

Within this paradigm, all of our solutions have a standard way to propagate information out to the Enterprise.

The Struggle

Unsurprisingly, goals as lofty as these came with some pretty serious growing pains. Most notably, these included:

Complexity of Microservices

When we broke out our capabilities into individual components, the complexity of our systems and infrastructure naturally grew immensely. In the early stages, we struggled with build pipelines and deployment strategies, and sometimes even struggled to debug issues adequately. As time went on, we used tools like Kubernetes, log aggregators, and reusable pipelines to help simplify this. We've also had to stress the importance of internal development documentation.

Latency

As we introduced more components, redundant API Gateway layers, and features, we noticed that our original architecture introduced unnecessary latency for the end user. Since learning this, we have gradually been refactoring domains to eliminate these redundant API Gateway layers and reworking features to reduce latency where possible.

Datacenter Dependencies

While we moved the bulk of our enterprise off of a monolithic architecture, we still handled order fulfillment, accounting, and the general ledger through our legacy Oracle implementation. As a result, our cloud infrastructure depended on being able to connect to our colocation data center via AWS Direct Connect. We mitigated this by isolating the connected components with Kafka and their own failover routines, and by adding redundancy to the Direct Connect implementation.
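The isolation idea can be sketched as follows: cloud services keep appending events to a log, and a bridge to the legacy system delivers them by offset, so an outage on the link delays delivery instead of losing events or blocking publishers. This is an illustrative assumption rather than Lifeway's implementation. `EventLog` is an in-memory stand-in for a Kafka topic, and `LegacyBridge` and the `OrderPlaced` event type are hypothetical names.

```python
from typing import Callable


class EventLog:
    """In-memory stand-in for a durable topic: append-only, read by offset."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def read_from(self, offset: int) -> list[dict]:
        return self._events[offset:]


class LegacyBridge:
    """Forwards events to a legacy system, remembering the next offset to deliver."""

    def __init__(self, log: EventLog, send: Callable[[dict], None]) -> None:
        self._log = log
        self._send = send
        self.offset = 0

    def drain(self) -> int:
        """Deliver pending events in order; stop at the first connection failure."""
        delivered = 0
        for event in self._log.read_from(self.offset):
            try:
                self._send(event)
            except ConnectionError:
                break  # link is down: keep the offset so the event is retried later
            self.offset += 1
            delivered += 1
        return delivered
```

While the link is down, `drain()` returns 0 and publishers are unaffected. Once the link recovers, the next `drain()` call delivers the backlog in its original order.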

The Gains

As we ironed out these growing pains, these architecture decisions have had a drastic impact on the culture and capabilities of our development teams, and not just on the quality of our systems. We have a homogeneous interface for all solutions, which lets teams operate independently (without restricting or limiting tooling) and move faster by playing to their strengths. For example, we have teams using the JVM (Scala, Kotlin, and Java), while other teams use Node.js, TypeScript, and JavaScript exclusively. The modularity of this architecture encourages diversity amongst our Dev teams and has resulted in some remarkable innovation.

In addition to this, we have incredible flexibility as our business at Lifeway is looking to evolve to support a more digital church. Some examples include:

The Future

We have laid a great foundation for future development, so it's reasonable to ask: what is Lifeway looking to improve architecturally over the next 2 years? We never want to stop innovating or refactoring, and we always want to have our finger on the pulse of emerging technologies and architectures at Lifeway.

With this in mind, Lifeway is evaluating the use of Headless Content Management at Enterprise scale, as we see more and more large-scale eCommerce companies trending in this direction, in line with the MACH Alliance movement. We have the platform we need for the eCommerce experience; now we need to shore up the way our content and customer journeys are delivered on the front end.